With the recent fires in California, I’ve been concerned about air quality: it tells me whether I should run, whether we should go on our daily walk, and whether we should leave our doors open like we usually do. The EPA uses air quality sensors around the country to collect data and displays it on the AirNow website. These sensors are very expensive and therefore not placed everywhere. Air quality, of course, differs depending on where you live, and the closest EPA sensor to me is about 10 miles away. The EPA has started including data from low-cost sensors made by a company called PurpleAir in its fire and smoke map.

With someone’s PurpleAir sensor about 0.5 miles from me, I can get a reasonable view of local air quality. Being the tinkerer that I am, I decided to look into the PurpleAir outdoor air sensor. At $279, it was a little out of my “curiosity price range”. After a little research, I was able to determine what parts are in the PurpleAir sensor: two Plantower PMS5003 laser particulate sensors and a BME280 temperature/pressure/humidity sensor, all running on an ESP8266 board.

I’ve been experimenting with the NodeMCU microcontroller, which is based on the ESP8266, so I was already familiar with parts of the setup. I already have an indoor temperature sensor running on a NodeMCU, so adding a second device shouldn’t be that difficult. On my Home Assistant instance, I’m running the ESPHome add-on, which makes the ESP modules available to Home Assistant. ESPHome supports lots of devices, including the PMS5003 and the BME280, which simplifies the software part of the setup.

In addition to purchasing the PMS5003, the BME280 and a Wemos D1 mini compatible board (ESP8266), I purchased a PVC cap to mount everything in. Total parts cost was about $45. I followed parts of an online tutorial for the wiring, which meant soldering the PMS5003’s power line to 5V on the board, its ground to ground on the board and its TX line to D4. For the BME280, power went to 3.3V, SDA to D2 and SCL to D1.

After wiring up the board, I used my trusty Ryobi Hot Glue Gun to glue the pieces into the PVC housing.

I then configured ESPHome. The ESPHome configuration is below:

    uart:
      rx_pin: D4
      baud_rate: 9600

    sensor:
      - platform: pmsx003
        type: PMSX003
        pm_1_0:
          name: "Particulate Matter <1.0µm Concentration"
          filters:
            - throttle: 30s
        pm_2_5:
          name: "Particulate Matter <2.5µm Concentration"
          filters:
            - throttle: 30s
        pm_10_0:
          name: "Particulate Matter <10.0µm Concentration"
          filters:
            - throttle: 30s
      - platform: bme280
        address: 0x76
        i2c_id: bus_a
        temperature:
          name: "Outside Temperature"
          oversampling: 16x
          accuracy_decimals: 1
        pressure:
          name: "Outside Pressure"
          accuracy_decimals: 1
        humidity:
          name: "Outside Humidity"
          accuracy_decimals: 1
        update_interval: 30s
      - platform: dht
        pin: D5
        temperature:
          name: "Outside Temperature Alt"
        humidity:
          name: "Outside Humidity Alt"
        update_interval: 30s

    i2c:
      sda: D2
      scl: D1
      scan: True
      id: bus_a

In addition to the BME280, I added a second temperature/humidity sensor, a DHT22, so that I can compare results; the BME280 apparently reads inaccurately because the component itself heats up. (I added the DHT22 after the pictures were taken.)

With the sensors set up in ESPHome, the next part was converting the readings into an air quality index (AQI). There are various calculations and corrections used to compute the index. I stuck to a simple calculation that I found in Jason Snell’s Scriptable widget that works with PurpleAir data.
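The simple calculation is a linear interpolation inside whichever concentration band the reading falls in. Below is a minimal, table-driven sketch of that idea for PM2.5, using the breakpoints that appear in the comment table of the Node-RED function further down. The names `PM25_BANDS` and `pm25ToAqi` are made up for illustration, and selecting the band by its upper bound is my own simplification, not something taken from the widget.

```javascript
// PM2.5 breakpoints (µg/m³) and the AQI range each band maps to.
// Values copied from the comment table in the Node-RED function.
const PM25_BANDS = [
  { cLo: 0.0,   cHi: 12.0,  iLo: 0,   iHi: 50 },
  { cLo: 12.1,  cHi: 35.4,  iLo: 51,  iHi: 100 },
  { cLo: 35.5,  cHi: 55.4,  iLo: 101, iHi: 150 },
  { cLo: 55.5,  cHi: 150.4, iLo: 151, iHi: 200 },
  { cLo: 150.5, cHi: 250.4, iLo: 201, iHi: 300 },
  { cLo: 250.5, cHi: 350.4, iLo: 301, iHi: 400 },
  { cLo: 350.5, cHi: 500.0, iLo: 401, iHi: 500 },
];

// Linear interpolation within the band that covers the concentration.
// Returns null for non-numeric, negative, or above-scale readings.
function pm25ToAqi(pm) {
  if (typeof pm !== "number" || Number.isNaN(pm) || pm < 0) return null;
  // Taking the first band whose upper bound covers the reading means a
  // value such as 12.05 lands in the next band instead of in a gap.
  const band = PM25_BANDS.find((b) => pm <= b.cHi);
  if (!band) return null;
  const slope = (band.iHi - band.iLo) / (band.cHi - band.cLo);
  return Math.round(slope * (pm - band.cLo) + band.iLo);
}

console.log(pm25ToAqi(12.0)); // 50, top of "Good"
console.log(pm25ToAqi(35.4)); // 100, top of "Moderate"
```

Keeping the breakpoints in a table makes it easier to compare them against the published AQI ranges than a chain of `if` statements does.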

I’m a big fan of Node-RED and used it to periodically take the data from the sensors and generate an AQI. In Node-RED, I poll the sensor once a minute, calculate the AQI and then update the Home Assistant sensor.
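One detail of the polling step: the poll-state node in this flow is configured to pass the entity state along as a string, and Home Assistant reports states such as `unavailable` or `unknown` when a device is offline. A small validation helper in front of the AQI math could look like the sketch below. `parseReading` and `MAX_PLAUSIBLE_PM` are hypothetical names; the 1000 cutoff mirrors the upper limit used in the flow's function node.

```javascript
// Largest PM value treated as plausible; the flow discards anything above 1000.
const MAX_PLAUSIBLE_PM = 1000;

// Convert a Home Assistant state string into a number, or null if unusable.
function parseReading(state) {
  if (state === undefined || state === null) return null;
  const text = String(state).trim();
  if (text === "") return null; // Number("") is 0, which would look valid
  const value = Number(text);
  if (!Number.isFinite(value)) return null; // "unavailable", "unknown", ...
  if (value < 0 || value > MAX_PLAUSIBLE_PM) return null;
  return value;
}

console.log(parseReading("12.3"));
console.log(parseReading("unavailable"));
```

Returning `null` for every kind of bad input gives the rest of the function a single case to check before it computes an index.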

    [{"id":"90c83f52.90ab4","type":"poll-state","z":"3c8c01a5.14121e","name":"2.5um","server":"d83da4b3.5bea38","version":1,"exposeToHomeAssistant":false,"haConfig":[{"property":"name","value":""},{"property":"icon","value":""}],"updateinterval":"60","updateIntervalUnits":"seconds","outputinitially":true,"outputonchanged":false,"entity_id":"sensor.particulate_matter_2_5um_concentration","state_type":"str","halt_if":"","halt_if_type":"str","halt_if_compare":"is","outputs":1,"x":110,"y":1380,"wires":[["46dfb46e.8c564c"]]},{"id":"46dfb46e.8c564c","type":"change","z":"3c8c01a5.14121e","name":"Set Payload","rules":[{"t":"set","p":"particulate","pt":"msg","to":"payload","tot":"msg"}],"action":"","property":"","from":"","to":"","reg":false,"x":350,"y":1380,"wires":[["54903d05.bb2a04"]]},{"id":"54903d05.bb2a04","type":"function","z":"3c8c01a5.14121e","name":"","func":"function calcAQI(Cp, Ih, Il, BPh, BPl) {\n var a = (Ih - Il);\n var b = (BPh - BPl);\n var c = (Cp - BPl);\n return Math.round((a/b) * c + Il);\n}\n \nfunction getAQIDescription(aqi) {\n\tif (aqi >= 401) {\n\t return 'Hazardous';\n\t} else if (aqi >= 301) {\n\t return 'Hazardous';\n\t} else if (aqi >= 201) {\n\t return 'Very Unhealthy';\n\t} else if (aqi >= 151) {\n\t return 'Unhealthy';\n\t} else if (aqi >= 101) {\n\t return 'Unhealthy for Sensitive Groups';\n\t} else if (aqi >= 51) {\n\t return 'Moderate';\n\t} else if (aqi >= 0) {\n\t return 'Good';\n\t} else {\n\t return undefined;\n\t}\n }\n\nfunction getAQIMessage(aqi) {\n\tif (aqi >= 401) {\n\t return '>401: Health alert: everyone may experience more serious health effects';\n\t} else if (aqi >= 301) {\n\t return '301-400: Health alert: everyone may experience more serious health effects';\n\t} else if (aqi >= 201) {\n\t return '201-300: Health warnings of emergency conditions. The entire population is more likely to be affected. ';\n\t} else if (aqi >= 151) {\n\t return '151-200: Everyone may begin to experience health effects; members of sensitive groups may experience more serious health effects.';\n\t} else if (aqi >= 101) {\n\t return '101-150: Members of sensitive groups may experience health effects. The general public is not likely to be affected.';\n\t} else if (aqi >= 51) {\n\t return '51-100: Air quality is acceptable; however, for some pollutants there may be a moderate health concern for a very small number of people who are unusually sensitive to air pollution.';\n\t} else if (aqi >= 0) {\n\t return '0-50: Air quality is considered satisfactory, and air pollution poses little or no risk';\n\t} else {\n\t return undefined;\n\t}\n }\n\n\n\nvar pm = msg.particulate;\nvar aqi;\n\nif (isNaN(pm)) aqi = \"-\"; \nif (pm === undefined) aqi = \"-\";\nif (pm < 0) aqi = pm; \nif (pm > 1000) aqi = \"-\"; \n /* \n Good 0 - 50 0.0 - 15.0 0.0 – 12.0\n Moderate 51 - 100 >15.0 - 40 12.1 – 35.4\n Unhealthy for Sensitive Groups 101 – 150 >40 – 65 35.5 – 55.4\n Unhealthy 151 – 200 > 65 – 150 55.5 – 150.4\n Very Unhealthy 201 – 300 > 150 – 250 150.5 – 250.4\n Hazardous 301 – 400 > 250 – 350 250.5 – 350.4\n Hazardous 401 – 500 > 350 – 500 350.5 – 500\n */\n\nvar particulateSize;\nvar sensorName;\nvar sensorFriendlyName;\nif (msg.topic.includes('2_5')) { \n particulateSize = \"2.5\";\n sensorName = \"aqi_pm_25\";\n sensorFriendlyName = \"EPA PM 2.5 AQI\";\n\n if (aqi === undefined) {\n\t\tif (pm > 350.5) {\n\t\t\taqi = calcAQI(pm, 500, 401, 500, 350.5);\n\t\t} else if (pm > 250.5) {\n\t\t\taqi = calcAQI(pm, 400, 301, 350.4, 250.5);\n\t\t} else if (pm > 150.5) {\n\t\t\taqi = calcAQI(pm, 300, 201, 250.4, 150.5);\n\t\t} else if (pm > 55.5) {\n\t\t\taqi = calcAQI(pm, 200, 151, 150.4, 55.5);\n\t\t} else if (pm > 35.5) {\n\t\t\taqi = calcAQI(pm, 150, 101, 55.4, 35.5);\n\t\t} else if (pm > 12.1) {\n\t\t\taqi = calcAQI(pm, 100, 51, 35.4, 12.1);\n\t\t} else if (pm >= 0) {\n\t\t\taqi = calcAQI(pm, 50, 0, 12, 0);\n\t\t} else {\n\t\t\taqi = undefined;\n\t\t}\n\t}\n} else {\n particulateSize = \"10.0\";\n sensorName = \"aqi_pm_10\";\n sensorFriendlyName = \"EPA PM 10 AQI\";\n if (aqi === undefined) {\n\t\tif (pm > 425) {\n\t\t\taqi = calcAQI(pm, 500, 301, 604, 425);\n\t\t} else if (pm > 355) {\n\t\t\taqi = calcAQI(pm, 300, 201, 424, 355);\n\t\t} else if (pm > 255) {\n\t\t\taqi = calcAQI(pm, 200, 151, 354, 255);\n\t\t} else if (pm > 155) {\n\t\t\taqi = calcAQI(pm, 150, 101, 254, 155);\n\t\t} else if (pm > 55) {\n\t\t\taqi = calcAQI(pm, 100, 51, 154, 55);\n\t\t} else if (pm >= 0) {\n\t\t\taqi = calcAQI(pm, 50, 0, 54, 0);\n\t\t} else {\n\t\t\taqi = undefined;\n\t\t}\n }\n}\nmsg.payload = {\"aqi\": aqi, \"description\": getAQIDescription(aqi), \"message\": getAQIMessage(aqi), \"particulate\" : particulateSize, \"sensor_name\": sensorName, \"friendly_name\": sensorFriendlyName};\n\nreturn msg;","outputs":1,"noerr":0,"initialize":"","finalize":"","x":560,"y":1380,"wires":[["ba491750.52e4f8"]]},{"id":"ba491750.52e4f8","type":"ha-api","z":"3c8c01a5.14121e","name":"Update Sensor State","server":"d83da4b3.5bea38","debugenabled":false,"protocol":"http","method":"post","path":"/states/sensor.{{payload.sensor_name}}","data":"{\"state\":\"{{payload.aqi}}\",\"attributes\":{\"icon\":\"mdi:chemical-weapon\",\"friendly_name\":\"{{payload.friendly_name}}\",\"description\":\"{{payload.description}}\",\"particulate_size\":\"{{payload.particulate}}\",\"unit_of_measurement\":\"AQI\",\"message\":\"{{payload.message}}\"}}","dataType":"json","location":"none","locationType":"none","responseType":"json","x":800,"y":1380,"wires":[[]]},{"id":"d83da4b3.5bea38","type":"server","z":"","name":"Home Assistant"}]
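The last node in the flow posts the result to Home Assistant as a sensor state with a handful of attributes. For anyone who wants to do the same thing outside Node-RED, here is a rough sketch of the equivalent request against Home Assistant's REST API (`POST /api/states/<entity_id>` with a bearer token). `buildStateUpdate` and `pushState` are illustrative names, and the `haUrl` and `token` arguments are placeholders for your own instance URL and long-lived access token; none of these come from the flow itself.

```javascript
// Build the path and JSON body for Home Assistant's "set state" REST call,
// mirroring the fields the ha-api node sends in the flow above.
function buildStateUpdate(result) {
  return {
    path: `/api/states/sensor.${result.sensor_name}`,
    body: {
      state: String(result.aqi),
      attributes: {
        icon: "mdi:chemical-weapon",
        friendly_name: result.friendly_name,
        description: result.description,
        particulate_size: result.particulate,
        unit_of_measurement: "AQI",
        message: result.message,
      },
    },
  };
}

// Hypothetical sender; needs a runtime with a global fetch (Node 18+).
async function pushState(result, haUrl, token) {
  const { path, body } = buildStateUpdate(result);
  const response = await fetch(haUrl + path, {
    method: "POST",
    headers: {
      Authorization: `Bearer ${token}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify(body),
  });
  if (!response.ok) {
    throw new Error(`Home Assistant returned ${response.status}`);
  }
  return response.json();
}
```

Splitting the payload builder from the network call keeps the part that matters (which fields end up on the sensor) easy to inspect without a running Home Assistant instance.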

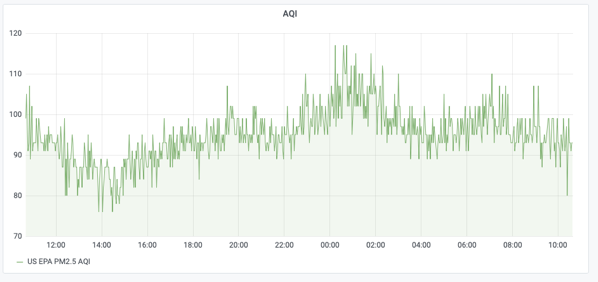

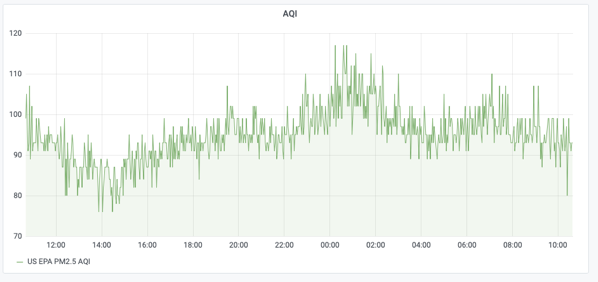

Having the AQI sensor in Home Assistant allows me to quickly glance and see how bad the air is outside (at this point, I can actually see the poor air!).

Good air quality is an AQI of 50 or less and, from the graph above, we haven’t seen that in a while!

I mounted the finished product under a second-floor deck, which should keep the worst of the rain off it. PurpleAir recommends not covering the bottom with anything, so I’m going to go with that and see what happens. Having it completely exposed outside isn’t great. It is powered by a PoE-to-USB adapter, as I already had Ethernet running out there.

The AQI data is interesting and is actually useful in telling me how much physical activity I should do. Other pieces of data I collect are neat, but not all that useful.