-

Dealing with the influx of scooters

I try to get out and run 3-4 times a week down by Mission Bay, as there is a nice path and I don't have to be afraid of vehicle traffic. I used to run on the sidewalk where there was one and on dirt where there wasn't; however, with traffic whizzing by at 55 mph (the speed limit is 45 mph), I got smart and decided that I'd just drive to a nice place and run. Last year on one of my runs, I noticed electric scooters parked in groups along the path. Over the next few months, the scooters started appearing just about everywhere I went in the city.

The scooters are an interesting solution to the last mile problem and appear to be useful for a lot of people. However, the companies running the scooters have taken the approach that they'll just "disrupt" transportation, do what they want, and deal with the fallout and the laws later. This has been a big topic in the news, with injuries happening all the time, lawsuits (San Diego is currently facing a lawsuit about disabled access on the sidewalks), and some riders disobeying laws.

The San Diego mayor and city council have been working on ways to handle these scooters so that they can co-exist with everyone in the city. While this may seem like the right thing to do, I'd argue that instead of spending time on new ways to handle the scooters, the city should look at the problems they are causing and at the laws that already exist to handle them.

In my view, there are a number of issues that I've seen:

- Scooters are parked on the sidewalk either by the companies (or their contractors) or the riders.

- Scooters are being ridden on the sidewalk and the riders are getting into accidents with innocent pedestrians.

- Scooter riders are riding in the street in the wrong direction and not stopping at traffic lights and/or stop signs.

- Parent and child riding on a scooter.

- Kids riding the scooters.

The scooters, themselves, aren't the problem in my opinion. It is the riders (mostly) that don't know what they are supposed to do or frankly don't care.

Let's take a closer look at my list.

Scooters parked on sidewalks

This is already illegal under California Vehicle Code 21235:

(i) Leave a motorized scooter lying on its side on any sidewalk, or park a motorized scooter on a sidewalk in any other position, so that there is not an adequate path for pedestrian traffic.

While people may argue about what counts as an adequate path, unless the sidewalk is really wide, a scooter on the sidewalk won't allow 2 people to pass one another comfortably.

Scooters ridden on the sidewalk

This is already illegal under CVC 21235:

(g) Operate a motorized scooter upon a sidewalk, except as may be necessary to enter or leave adjacent property.

If we consider the path around Mission Bay a bike path and not a sidewalk (scooters can be ridden on bike paths), San Diego Municipal Code §63.20.7 states:

Driving Vehicles On Beach Prohibited; Exceptions; Speed Limit On Beach

(a) Except as permitted by the Director and except as specifically permitted on Fiesta Island in Mission Bay, no person may drive or cause to be driven any motor vehicle as defined in the California Vehicle Code on any beach, any sidewalk or turf adjacent thereto; provided, however, that motor vehicles which are being actively used for the launching or beaching of a boat may be operated across a beach area designated as a boat launch zone.

A scooter is defined as a motor vehicle under California Vehicle Code and the path around Mission Bay is adjacent to a beach thereby making it illegal to ride a scooter on the path.

CVC 21230 states:

Notwithstanding any other provision of law, a motorized scooter may be operated on a bicycle path or trail or bikeway, unless the local authority or the governing body of a local agency having jurisdiction over that path, trail, or bikeway prohibits that operation by ordinance.

This means that San Diego can regulate scooters on bike paths (as it has done).

Scooters ridden recklessly

As scooter riders must have a driver's license (or permit) and scooters are classified as motor vehicles, the riders must follow all the rules of the road, including riding in the correct direction on the street, stopping at stop signs and traffic lights, yielding, etc. This is already covered under the California Vehicle Code.

Parent and child riding on a scooter

Again, illegal under CVC 21235:

(e) Operate a motorized scooter with any passengers in addition to the operator.

(c) Operate a motorized scooter without wearing a properly fitted and fastened bicycle helmet that meets the standards described in Section 21212, if the operator is under 18 years of age.

Kids riding the scooters

Illegal under CVC 21235:

(d) Operate a motorized scooter without a valid driver’s license or instruction permit.

As I wrote in the beginning, I don't have a problem with the scooters if they are operated in a safe and respectful manner (just like driving). I do, however, have a major problem with scooters blocking the sidewalk when parked and riders zipping by me when I'm walking on the sidewalk. In addition, driving is already dangerous enough without having to take into account a scooter rider on the road not obeying the law.

Instead of trying to add more regulations for scooters, how about the city enforce the current laws on the books? This would go a long way toward solving the problems. The companies that operate the scooters could possibly do more to make their riders understand how to properly operate them. As much as I'd like to blame these companies, it is the riders that are causing the problems. The companies, however, need to stage their scooters in appropriate locations so they don't block sidewalks, and need to pick them up in a reasonable amount of time, as they look like trash scattered all over.

I am not a lawyer and this is not legal advice. This article is based on my interpretation of the laws.

-

Automating my TV

One of the lazy things that I've tried to do was have the Amazon Echo turn my TV on and off. When I had Home Assistant running on my Raspberry Pi, I used a component that controlled the TV and Apple TV via HDMI CEC. Unfortunately it wasn't quite reliable and I lost the ability to use it when I migrated to a VM for Home Assistant.

In a recent release of Home Assistant, support was added for Roku and since I have a TCL Roku TV, I decided to give it a try. The component itself works, but has a few major limitations for me. First off it initializes on Home Assistant startup. In order to conserve a little energy, I have my TV, Apple TV, and sound bar on a Z-Wave controlled outlet. The outlet doesn't turn on until the afternoon, so most of the time when Home Assistant restarts (I have it restart at 6 am so that my audio distribution units initialize as they also turn off at night), the TV isn't turned on. The second issue has to do with the TV going to sleep. It has a deep sleep and a fast start mode; fast start uses more energy, so I leave it off. The Roku component uses HTTP commands to control the device or TV; when the TV is in deep sleep, it doesn't respond to HTTP commands. This, of course, makes it impossible to turn on the TV with the component.

After thinking about this problem for a while, I came up with some Node-RED flows to turn on the TV and handle status updates. The TV, it turns out, responds to a Wake-On-LAN packet because I have it connected via Ethernet, and Home Assistant has a WOL component that lets me send the packet.

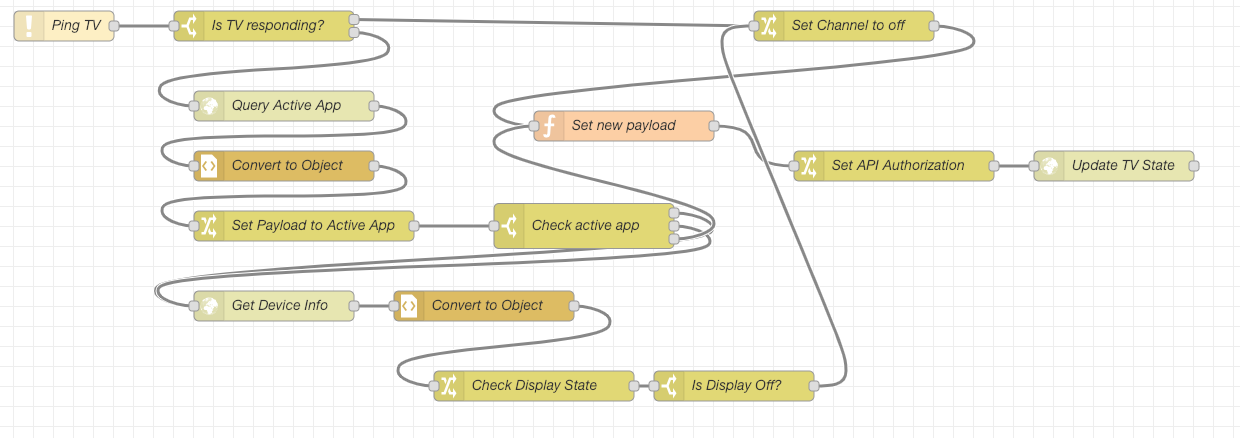

My flow to check on the TV state is a bit complicated.

- First it pings the TV. The ping is done every 10 seconds.

- If the TV responds, it sends an HTTP request to the TV.

- When the response comes back, it is parsed and the currently running application is checked. This also lets me know what Roku channel is currently active. I have noticed that my TV reports that the Davinci Channel is active when I turn the TV off, so I special case that.

- If the channel is not null and not the Davinci Channel, I then send a command to check to see if the display is off.

- After I figure out the app and if the display is off, I craft a new payload with the current channel in it.

- The payload is then sent in an HTTP request back to Home Assistant's HTTP Sensor API.

- If the TV doesn't respond to the ping, I set the payload to off and then send the state to the Home Assistant API.
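The decision logic in the steps above can be sketched in a few lines of Python. This is only an illustration, not the flow itself: the function names are made up, the XML shapes are assumed from the two Roku queries the flow makes (`/query/active-app` and `/query/device-info`), and it simplifies things by checking the display state on every pass and by reporting a missing app as "Idle".

```python
import json
import xml.etree.ElementTree as ET

IDLE_APP = "Davinci Channel"  # what the TV reports after it is switched off


def active_app(active_app_xml):
    """Return the app name from a /query/active-app response, or None."""
    return ET.fromstring(active_app_xml).findtext("app") or None


def display_is_off(device_info_xml):
    """True when a /query/device-info response has power-mode DisplayOff."""
    mode = ET.fromstring(device_info_xml).findtext("power-mode")
    return mode == "DisplayOff"


def tv_state(ping_ok, active_app_xml="", device_info_xml=""):
    """Collapse the ping result and the two XML responses into one state."""
    if not ping_ok:
        return "off"  # no ping reply: outlet is off or TV is in deep sleep
    if device_info_xml and display_is_off(device_info_xml):
        return "off"
    app = active_app(active_app_xml) if active_app_xml else None
    if app is None or app == IDLE_APP:
        return "Idle"  # assumption: treat "no app" like the idle channel
    return app


def sensor_payload(state):
    """JSON body for a POST to /api/states/sensor.tv_state."""
    return json.dumps({
        "state": state,
        "attributes": {"icon": "mdi:television", "friendly_name": "TV"},
    })
```

The body returned by `sensor_payload` would be sent with an `Authorization: Bearer ...` header, the same way the "Set API Authorization" node does in the flow.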

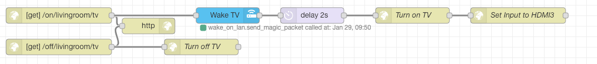

Turning on the TV is a bit less complicated.

- Send WOL packet to TV.

- Pause.

- Send HTTP command to turn on TV.

- Send HTTP command to set input to HDMI3 (my Apple TV).

Turning off the TV is even easier.

- Send HTTP command to turn off TV.
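For anyone who would rather script this than use Node-RED, here is a rough Python sketch of the same on/off sequence. Several things in it are assumptions rather than part of my setup: the function names, the 5 second pause (I didn't note the exact delay above), and the key names `PowerOn`, `PowerOff`, and `InputHDMI3`, which are how I understand Roku's External Control Protocol names them for Roku TVs. Check them against Roku's documentation before relying on this.

```python
import socket
import time
import urllib.request


def magic_packet(mac):
    """Build a Wake-on-LAN magic packet: 6 bytes of 0xFF, then the MAC 16 times."""
    raw = bytes.fromhex(mac.replace(":", "").replace("-", ""))
    if len(raw) != 6:
        raise ValueError("MAC address must be 6 bytes")
    return b"\xff" * 6 + raw * 16


def send_wol(mac, broadcast="255.255.255.255", port=9):
    """Broadcast the magic packet over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(magic_packet(mac), (broadcast, port))


def keypress_url(host, key):
    """URL for a Roku keypress command (sent as an empty POST)."""
    return f"http://{host}:8060/keypress/{key}"


def press(host, key):
    request = urllib.request.Request(keypress_url(host, key), data=b"", method="POST")
    urllib.request.urlopen(request, timeout=5).close()


def turn_on(host, mac, pause_seconds=5.0):
    """Wake the TV, wait for it to start answering HTTP, then power on and pick HDMI3."""
    send_wol(mac)
    time.sleep(pause_seconds)  # assumed delay; tune for your TV
    press(host, "PowerOn")
    press(host, "InputHDMI3")


def turn_off(host):
    press(host, "PowerOff")
```

Calling `turn_on("10.0.3.41", "aa:bb:cc:dd:ee:ff")` would run the whole sequence; the MAC address here is a placeholder.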

When I turn on the TV outlet, the state of the TV gets updated pretty quickly as the ping command from above is running every 10 seconds. I've posted the Node-RED flows below that can be imported and modified for your situation.

-

Adding Energy Monitoring to Home Assistant

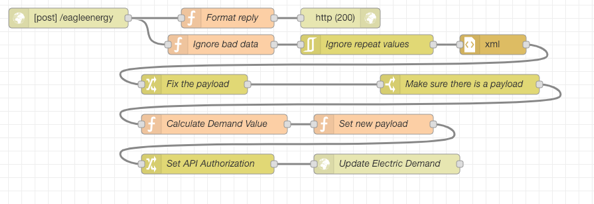

Now that I have Home Assistant running pretty well, I've started seeing what else I can add to it. There are several components for monitoring energy usage, but sadly none for my Rainforest Automation Eagle energy device. After a quick search, I found a Node-RED flow that looked promising. It would query the local API of the device (or the cloud one) and give me an answer. The next step was seeing how to get that into Home Assistant. I found the HTTP Sensor, which would let me dynamically create sensors. (There is so much to explore in Home Assistant that it will keep me entertained for a while.)

With all the pieces in place, I set the Node-RED flow to repeat every 15 seconds and then use the Home Assistant API to add the usage. This worked well for a few hours, but then I stopped receiving updates, only to discover that the Eagle device had stopped responding. When I checked the developer documentation, it indicated that the local API was not supported for my older device. However, an Uploader API was available that would push the data to me. That sounded interesting, so I created a flow in Node-RED that listened for a connection and then parsed the data using the flow I found before. The only problem was how to get the device to push me the data. The Eagle device has options for cloud providers, but something I missed before is that there is the ability to add a new one. I added my Node-RED install using http://10.0.3.100:1880/eagleenergy and the data started flowing!
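To give a feel for what the listener has to do, here is a small Python stand-in for the Node-RED side. Treat the details as assumptions: I'm assuming the device posts XML containing an `InstantaneousDemand` element with hex-encoded `Demand`, `Multiplier`, and `Divisor` fields, the names `demand_kw`, `UploadHandler`, and `serve` are made up for this sketch, and negative (two's complement) demand values are not handled.

```python
import xml.etree.ElementTree as ET
from http.server import BaseHTTPRequestHandler, HTTPServer


def demand_kw(upload_xml):
    """Return instantaneous demand in kW, or None if the post carries none."""
    root = ET.fromstring(upload_xml)
    node = next(root.iter("InstantaneousDemand"), None)
    if node is None:
        return None
    demand = int(node.findtext("Demand"), 16)
    # A zero multiplier or divisor is treated as 1 (assumption).
    multiplier = int(node.findtext("Multiplier"), 16) or 1
    divisor = int(node.findtext("Divisor"), 16) or 1
    return demand * multiplier / divisor


class UploadHandler(BaseHTTPRequestHandler):
    """Accepts the device's POSTs and prints the current demand."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length).decode("utf-8")
        try:
            kw = demand_kw(body)
        except (ET.ParseError, TypeError, ValueError):
            self.send_response(400)
            self.end_headers()
            return
        if kw is not None:
            print(f"demand: {kw:.3f} kW")
        self.send_response(200)
        self.end_headers()


def serve(port=1880):
    """Listen on all interfaces; point the device's custom uploader here."""
    HTTPServer(("0.0.0.0", port), UploadHandler).serve_forever()
```

In my setup Node-RED does this job and then forwards the value to Home Assistant's API; the sketch stops at printing the number.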

So far this new method has been working for almost 24 hours, so I have a lot more confidence that this will keep working.

The Node-RED flow is below:

[{"id":"b7dc8932.f85388","type":"xml","z":"15825822.ae8e1","name":"Convert to Object","property":"payload","attr":"","chr":"","x":310,"y":1440,"wires":[["21269998.3f97e6"]]},
{"id":"c85cb9e0.0ba818","type":"http request","z":"15825822.ae8e1","name":"Query Active App","method":"GET","ret":"txt","url":"http://10.0.3.41:8060/query/active-app","tls":"","x":310,"y":1380,"wires":[["b7dc8932.f85388"]]},
{"id":"b35dc59a.083738","type":"ping","z":"15825822.ae8e1","name":"Ping TV","host":"10.0.3.41","timer":"10","x":90,"y":1300,"wires":[["e2decb33.dc36e8"]]},
{"id":"e2decb33.dc36e8","type":"switch","z":"15825822.ae8e1","name":"Is TV responding?","property":"payload","propertyType":"msg","rules":[{"t":"false"},{"t":"else"}],"checkall":"true","repair":false,"outputs":2,"x":290,"y":1300,"wires":[["6b2354bd.8306ec"],["c85cb9e0.0ba818"]],"outputLabels":["","Is awake?"]},
{"id":"a59cc790.158f68","type":"http request","z":"15825822.ae8e1","name":"Update TV State","method":"POST","ret":"txt","url":"https://homeassistant.com:8123/api/states/sensor.tv_state","tls":"","x":1140,"y":1440,"wires":[[]]},
{"id":"208bd98e.9ed806","type":"change","z":"15825822.ae8e1","name":"Set API Authorization","rules":[{"t":"set","p":"headers","pt":"msg","to":"{\"Authorization\":\"Bearer INSERT_BEARER\"}","tot":"json"}],"action":"","property":"","from":"","to":"","reg":false,"x":920,"y":1440,"wires":[["a59cc790.158f68"]]},
{"id":"21269998.3f97e6","type":"change","z":"15825822.ae8e1","name":"Set Payload to Active App","rules":[{"t":"set","p":"payload","pt":"msg","to":"payload.active-app.app[0]._","tot":"msg"}],"action":"","property":"","from":"","to":"","reg":false,"x":330,"y":1500,"wires":[["6d6fdcd0.004714"]]},
{"id":"71eea783.5158f8","type":"function","z":"15825822.ae8e1","name":"Set new payload","func":"var channel = msg.payload\nif (channel == \"Davinci Channel\") {\n channel = \"Idle\"\n}\nvar newPayload = {\"state\":channel,\"attributes\":{\"icon\":\"mdi:television\", \"friendly_name\":\"TV\"}}\nmsg.payload = newPayload\n\nreturn msg;","outputs":1,"noerr":0,"x":650,"y":1400,"wires":[["208bd98e.9ed806"]]},
{"id":"6b2354bd.8306ec","type":"change","z":"15825822.ae8e1","name":"Set Channel to off","rules":[{"t":"set","p":"payload","pt":"msg","to":"off","tot":"str"}],"action":"","property":"","from":"","to":"","reg":false,"x":870,"y":1300,"wires":[["71eea783.5158f8"]]},
{"id":"ee5484a0.bf69c8","type":"http request","z":"15825822.ae8e1","name":"Get Device Info","method":"GET","ret":"txt","url":"http://10.0.3.41:8060/query/device-info","tls":"","x":300,"y":1580,"wires":[["dfba9256.d0175"]]},
{"id":"6d6fdcd0.004714","type":"switch","z":"15825822.ae8e1","name":"Check active app","property":"payload","propertyType":"msg","rules":[{"t":"eq","v":"Davinci Channel","vt":"str"},{"t":"null"},{"t":"else"}],"checkall":"true","repair":false,"outputs":3,"x":610,"y":1500,"wires":[["ee5484a0.bf69c8"],["ee5484a0.bf69c8"],["71eea783.5158f8"]],"outputLabels":["Davinci Channel","Null",""]},
{"id":"dfba9256.d0175","type":"xml","z":"15825822.ae8e1","name":"Convert to Object","property":"payload","attr":"","chr":"","x":510,"y":1580,"wires":[["37ef8802.728648"]]},
{"id":"37ef8802.728648","type":"change","z":"15825822.ae8e1","name":"Check Display State","rules":[{"t":"set","p":"payload","pt":"msg","to":"payload.device-info.power-mode[0]","tot":"msg"}],"action":"","property":"","from":"","to":"","reg":false,"x":560,"y":1660,"wires":[["adaad7e0.489ba8"]]},
{"id":"adaad7e0.489ba8","type":"switch","z":"15825822.ae8e1","name":"Is Display Off?","property":"payload","propertyType":"msg","rules":[{"t":"eq","v":"DisplayOff","vt":"str"}],"checkall":"true","repair":false,"outputs":1,"x":760,"y":1660,"wires":[["6b2354bd.8306ec"]]}]

-

All In with Home Assistant

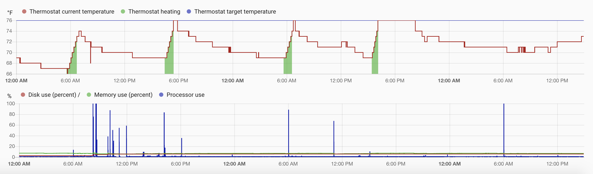

I've spent parts of the last 9 months playing with Home Assistant and have written about some of my adventures. A few weeks ago, I finally decided to go all in with Home Assistant and ditch my Vera. I bought an Aeotec Z-Stick Gen5 Z-Wave dongle and started moving all my devices over to it. Within a few days, I had all my devices moved over and unplugged my Vera. Everything was running great on my Raspberry Pi B, but I noticed that the History and Logbook features were slow. I like using the history to look at temperature fluctuations in the house.

I had read that switching from the SQLite database to a MySQL database would speed things up. So I installed MariaDB (a fork of MySQL) on my Raspberry Pi and saw a slight increase in speed, but not much. Next was to move MariaDB to a separate server using Docker. Again, a slight increase in speed, but it still lagged. At this point everything I read pointed to running Home Assistant on an Intel NUC or another computer. I didn't want to invest that kind of money in this, so I took a look at what I had and started down the path of installing Ubuntu on my old Mac mini which was completely overkill for it (Intel Quad Core i7, 16 GB RAM, 1 TB SSD). Then I remembered that I had read about a virtual machine image for Hass.io and decided to give that a try.

After some experimenting, I managed to get Home Assistant installed on a virtual machine running in VMware on my Mac Pro. (A few days after I did this, I saw that someone posted an article documenting this.) I gave the VM 8 GB of RAM, 2 cores (the Mac Pro has 12) and 50 GB of storage. Wow, the speed improvement was significant and history now shows up almost instantly (the database is running in a separate VM)! I was so pleased with this that I decided to unplug the Raspberry Pi and make the virtual machine my home automation hub. There were a few tricks, however. The virtual machine's main disk had to be set up as a SATA drive (the default SCSI wouldn't boot), suspending the VM confused it, and the Z-Wave stick wouldn't reconnect upon restart. After much digging, I found the changes I needed to make to the .vmx file in the virtual machine:

suspend.disabled = "TRUE"

usb.autoConnect.device0 = "name:Sigma\ Designs\ Modem"

(The USB auto connect is documented deep down on VMware's site.)

I've rebooted the Mac Pro a few times and everything comes up without a problem very quickly, so I'm now good to go with this setup. Z-Wave takes about 2.5 minutes to finish startup vs. 5 or 6 on the Pi. A friend asked if I was OK with running a "mission critical" component on a VM. I said that I was because the Mac Pro has been rock solid for a long time and my virtual machines have been performing well. I could change my mind later on, but I see no reason to spin up another machine when I have a perfectly overpowered machine that is idle 95% of the time.

What next? Now that I have more power for my automation, I may look at more pretty graphs and statistics. I may also just cool it for a while, as I've poured a lot of time into this lately to get things working to my satisfaction. This has definitely been an adventure and I am glad that I embarked on it.