I’ve been using my EdgeRouter Lite for more than 6 months now and couldn’t be happier with it. After posting my review, Ubiquiti contacted me and asked if I was interested in testing out some new hardware. As I love playing with new hardware, I couldn’t say no. I was actually eyeing the 802.11ac access points, but the price tag put me off as I didn’t need a new wireless access point; my Time Capsule has been working fine in bridge mode and covers my house pretty well.

Ubiquiti sent me a UniFi AP AC Lite and UniFi AP AC LR for testing. Both units are basically identical, with the LR providing better range and potentially better throughput on the 2.4 GHz band. I’m going to focus on the LR because the price difference ($89 vs $109) is so small that, for home and small business use, the LR is a no-brainer compared to the Lite (the Lite is also a bit smaller, which could help it fit in better on a ceiling in a home).

Most home users purchase an off-the-shelf router such as the Apple Time Capsule, which includes a router as well as a WiFi access point. This serves most people’s needs; however, some people find that they need additional access points to fill in the dead spots in their homes. To do this, they use either repeaters or additional routers in bridge mode, which basically wastes a large portion of the router. While this isn’t what I’m doing because I didn’t need to fill in gaps in coverage, I was quite intrigued by a WiFi access point that simply did WiFi. In addition, the UniFi access points are enterprise-grade access points, which means (to me) that they’re highly reliable and highly configurable.

When I first opened the AC Lite (I tested it first), the box contained the access point, a mounting bracket, and a PoE injector. A PoE injector allows power to be supplied over Ethernet; this means that only 1 Cat 6 cable goes to the access point, and the injector is placed close to the switch and plugged into a power strip. The first thing that disappointed me about this access point is that it doesn’t use the 802.3af PoE standard, which would have allowed me to connect it directly to my Linksys PoE+ switch. When I asked Ubiquiti about this, I was told that a lot of their customers are price conscious and, when deploying a lot of devices, the cost difference can be significant. In these cases, their customers use the UniFi Switch, which provides passive PoE (like the injector). For my testing setup, I simply turned the access point upside down (nose pointing down) on a high shelf. For permanent installation, they should be mounted on a ceiling (the docs indicate they can also be wall mounted, but based on the antenna design, ceiling mounting will be better). If I had known about access points that were this cost effective and could be PoE powered, I would definitely have run extra Cat 6 to central places in the ceilings. Anyone who is remodeling and putting in Ethernet cable should throw in a few extra runs in the ceilings to mount access points; even if they aren’t UniFi access points, some type of PoE access point could easily be installed.

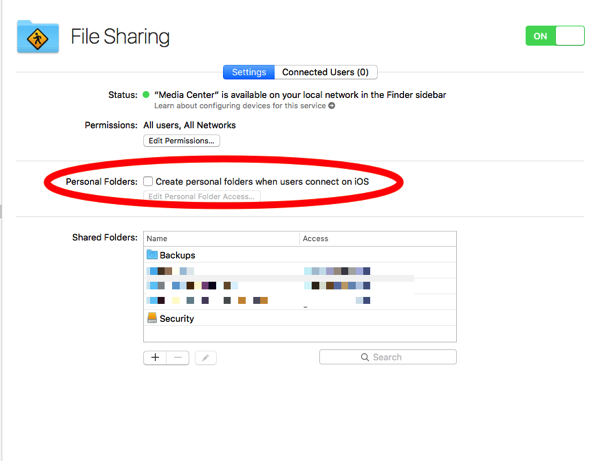

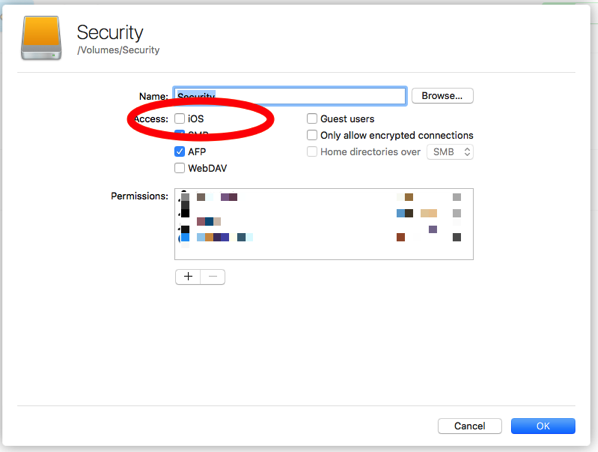

The second step was to install the UniFi Controller software on my server. The software is used for initial setup (they also have an Android app and an iOS app that configure the access point), monitoring, and ongoing maintenance of one or more access points as well as some of the other products in the UniFi line. The controller is a Java app and installed without too many problems. If installing on OS X Server, I recommend changing the ports that it uses by editing ~/Library/Application Support/UniFi/data/system.properties; OS X Server likes to run the web server on the standard port even if you turn off websites. Note that you have to run UniFi Controller once to create this file. In addition, when modifying the file, make sure that nothing follows the port number on the same line, such as a comment, as that will prevent the file from being read. (After spending 30 minutes trying to figure this out, I found a forum post with this information in it.)
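To avoid repeating my 30 minutes of head scratching, here is a minimal sketch of a check for the trailing-comment problem. The function name `check_port_lines` is made up for this post, and the regular expression assumes the port settings are keys ending in `port` (for example `unifi.https.port`); look at your own system.properties to confirm the key names before relying on it.

```shell
#!/bin/sh
# Hypothetical helper (not part of UniFi): list port settings in a
# properties file that have anything other than digits after the "=".
# Assumes port keys end in "port", e.g. unifi.https.port=8444.
check_port_lines() {
    # $1: path to system.properties
    bad=$(grep -nE '^[[:space:]]*[A-Za-z0-9_.]*port[[:space:]]*=' "$1" \
        | grep -vE '=[[:space:]]*[0-9]+$')
    if [ -n "$bad" ]; then
        # Print each offending line prefixed with its line number.
        printf '%s\n' "$bad"
        return 1
    fi
    return 0
}
```

On my setup I would call it as `check_port_lines "$HOME/Library/Application Support/UniFi/data/system.properties"`; no output and a zero exit status means the port lines look clean.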

I don’t like Java apps for daily use, but for server use, I have no objections to them (I also run Jenkins and it runs well without bogging down the machine). After installing the controller, I wanted to use my own SSL certificate (I’m not a huge fan of accepting self-signed SSL certificates). I set up an internal hostname for the machine and then imported my SSL certificate from a pem file with the commands below. When openssl prompts for an export password, enter the same value that is passed to -srcstorepass in the keytool command:

openssl pkcs12 -export -in server.pem -out ~/Desktop/mykeystore.p12 -name "unifi"

cd "/Users/mediacenter/Library/Application Support/UniFi/data"

keytool -importkeystore -srckeystore ~/Desktop/mykeystore.p12 -srcstoretype PKCS12 -srcstorepass aircontrolenterprise -destkeystore keystore -storepass aircontrolenterprise

(This requires restarting the controller software.)

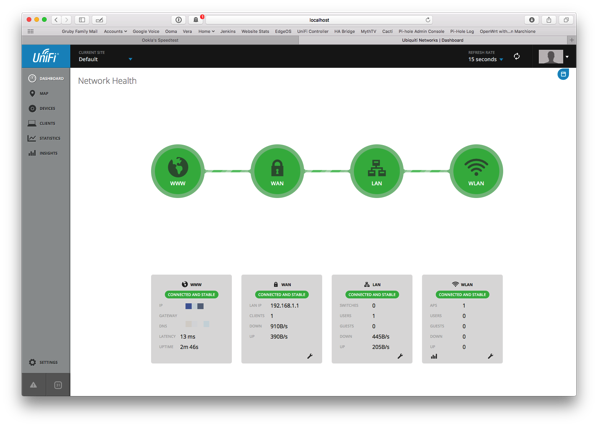

Once I had the controller software installed, I went to a browser and connected to port 8443 on my server. The controller software walks you through the simple setup and the access point is up and running. I don’t recommend stopping here, as there are a number of options to set up to take full advantage of the access point.

The controller interface is very utilitarian but, in my opinion, not easy to use. For the basic access point, it shows a lot of stuff that is irrelevant and can’t be turned off. The good news is that the controller software isn’t used all that often. I spent a bit of time experimenting with the interface to get what I wanted. First off, I wanted separate 2.4 GHz and 5 GHz networks. If you have one SSID for both 2.4 GHz and 5 GHz, Apple devices pick the frequency with the better signal, which tends to be 2.4 GHz, and they won’t jump over to 5 GHz automatically. I found a reference to an Apple technote describing the behavior. By separating out the 2.4 GHz and 5 GHz networks, you can explicitly select the frequency. (Apparently the band steering option in the UniFi access points is supposed to help with that.) Next up was a guest network. While the controller can set up a guest network and portal mode, this turns on QoS (Quality of Service) and actually degrades performance even if no one is connected to the guest network. This was not acceptable to me, so I just created a separate SSID, told it to use VLAN 1003, and used what I wrote about before to separate out the traffic. While I would have liked to use the built-in guest network and play with the portal, I rarely have people using the guest network, so the tradeoff wasn’t worth it for me.

There are also settings for controlling power and bands for the access point, but the default settings work for me.

So now that everything was set up, the next question was “do they work?” Well, it’s pretty hard for access points not to work! I set up the networks separate from my Time Capsule so that I didn’t subject my household to my testing and put my devices on them. Would my devices stay connected? Did the access point have hiccups and require rebooting? How was the performance?

I’ve been testing with my 2012 MacBook Pro, iPhone 6 and iPhone 6s, and iPad Mini 2. The iPhone 6 and 6s do 802.11ac, the iPad Mini 2 does 802.11n, and the MacBook Pro does 802.11n. I’ve found that the MacBook Pro consistently stays connected on the 5 GHz network (preferred network) and usually negotiates at 300 Mbps. Using iperf connecting to a local server, I get 150-200 Mbps. That’s not too shabby. The connection is rock solid and I don’t see the MacBook Pro switching to the 2.4 GHz network. Using the iPad Mini 2, I can stay connected to the 2.4 GHz network, but the Mini seems to require me to toggle WiFi periodically to see all the networks, including the 5 GHz network. I have no idea why, but it doesn’t appear to be an access point issue. When I use the 2.4 GHz network, I can get 50-60 Mbps, and on the 5 GHz network, I can get 110-140 Mbps. My iPhone has no problem with the 5 GHz network and gets 100-110 Mbps. (I used iPerf3 on iOS to do the measurements. iPerf3 has an awful user interface, but it does work.) I saw similar, if not better, performance with my Apple Time Capsule. Indications from reading the forum are that these access points trade off higher performance for a small number of users, like in my situation, against supporting more users. However, the performance is more than acceptable given that I currently have a 100 Mbps Internet connection and the only time I could exceed that is hitting my internal network.
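To put the MacBook Pro numbers in perspective, here is a small sketch that expresses measured throughput as a percentage of the negotiated link rate. The function name `link_efficiency` is made up for this post; the inputs are the 300 Mbps link rate and the 150-200 Mbps iperf results from above. Measured throughput on WiFi always lands well below the negotiated rate because of protocol overhead, so these are reasonable figures.

```shell
#!/bin/sh
# Hypothetical helper: measured throughput as a percentage of link rate.
# Usage: link_efficiency LINK_MBPS MEASURED_MBPS
link_efficiency() {
    awk -v link="$1" -v got="$2" \
        'BEGIN { printf "%.0f\n", got / link * 100 }'
}

link_efficiency 300 150   # prints 50
link_efficiency 300 200   # prints 67
```

So the iperf results work out to roughly half to two thirds of the negotiated rate.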

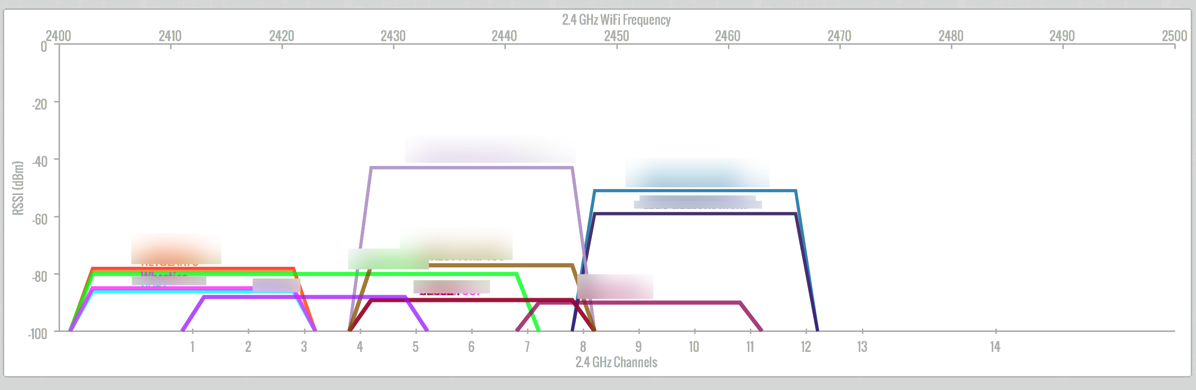

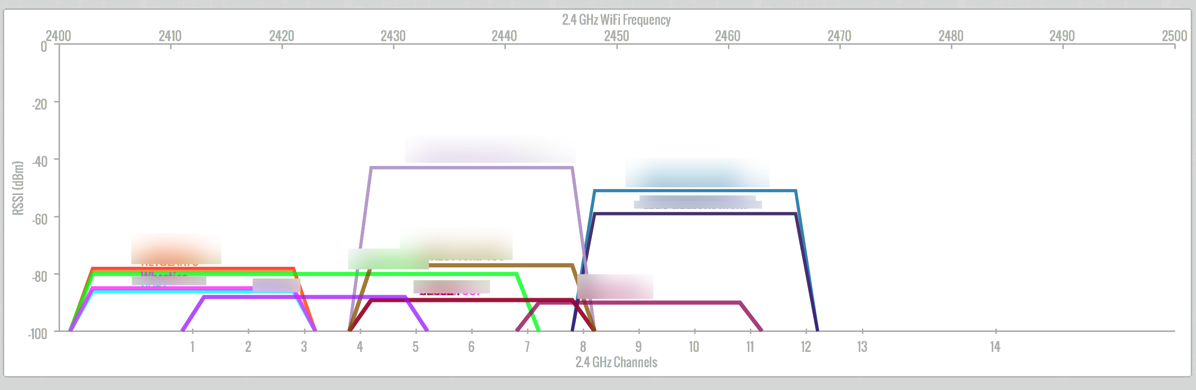

While I don’t live in a condo or a multi-unit dwelling with units stacked on top of each other, I do live in an area with crowded airwaves. The 2.4 GHz band, as you can see below, has a few peaks (my networks) and a lot of access points. Performance on 2.4 GHz is acceptable and, since I don’t normally run speed tests, is more than adequate for my 50 Mbps downstream cable modem connection (for now, until I get 200 Mbps, hopefully next month).

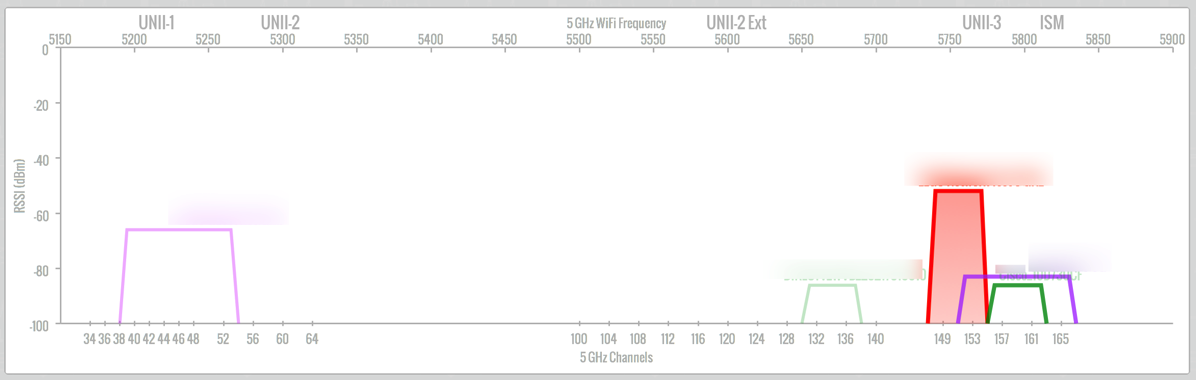

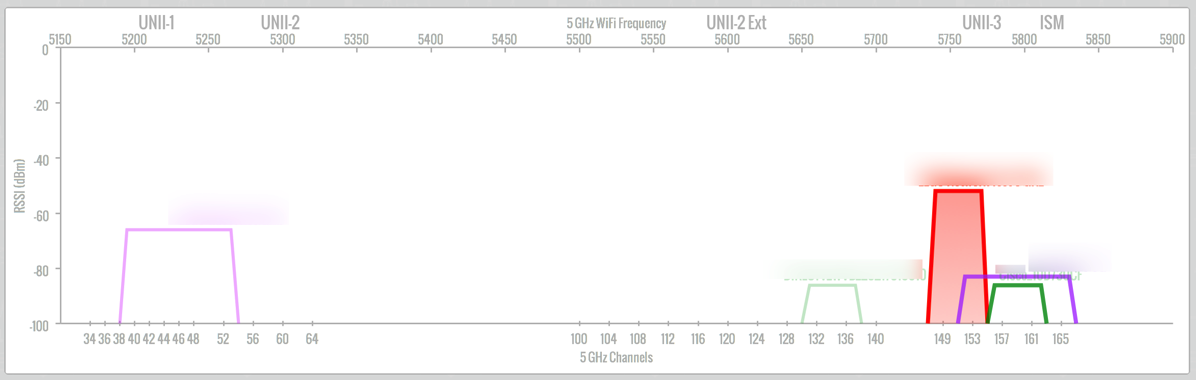

The 5 GHz frequency is a lot less crowded which is why I try to get my devices on it at all costs (I’m tempted to have the devices forget the 2.4 GHz network, but I suspect that will cause more problems).

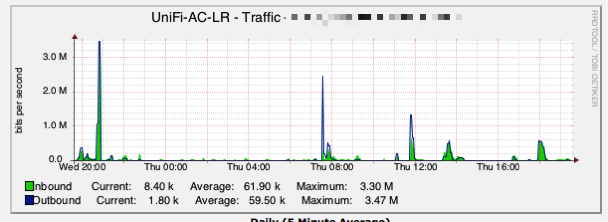

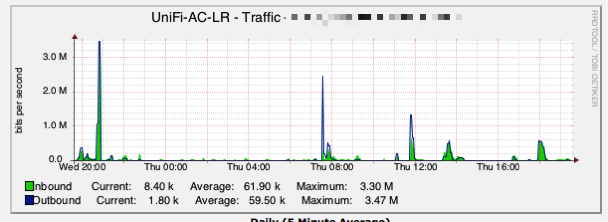

Since I love statistics, I turned on SNMP in the UniFi controller (it actually tells the access point to turn on SNMP, and monitoring is done by connecting to the AP and not the controller) and set up Cacti to monitor traffic. There is, of course, very little use in me monitoring traffic on my network, but I’m always curious about network performance and utilization. However, the graphs do tell me that I very, very rarely see bandwidth spikes above 50 Mbps.
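For anyone curious how a traffic graph turns SNMP data into Mbps, the arithmetic is simple: interface byte counters (such as the standard IF-MIB octet counters, which I am assuming the AP exposes) are sampled twice, and the difference is converted to bits per second. The sketch below is only an illustration of that calculation, not how Cacti implements it, and the function name `octets_to_mbps` is made up. It also ignores counter wraparound.

```shell
#!/bin/sh
# Hypothetical helper: average rate in Mbps between two octet counter
# samples. Does not handle the counter wrapping back to zero.
# Usage: octets_to_mbps FIRST_OCTETS SECOND_OCTETS INTERVAL_SECONDS
octets_to_mbps() {
    awk -v a="$1" -v b="$2" -v t="$3" \
        'BEGIN { printf "%.1f\n", (b - a) * 8 / t / 1000000 }'
}

# 1,875,000,000 bytes over a 5 minute polling interval:
octets_to_mbps 0 1875000000 300   # prints 50.0
```

In other words, a sustained 50 Mbps for 5 minutes means moving almost 1.9 GB, which explains why spikes above that level are so rare on my graphs.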

This access point is definitely a step up from consumer-grade router/access point combos. It is extremely flexible, cost effective, and unobtrusive (I forgot to mention that it looks like a smoke detector). I’ve been so happy with my EdgeRouter Lite and this access point that I have already purchased a UniFi AP AC Pro to see how that will perform.

Pros

- Highly configurable

- Easy to install

- PoE for placement with just an Ethernet cable

- Unobtrusive

- SNMP capable

- Decent performance in the single user environment

- Low cost

Cons

- Lite and LR units use passive PoE instead of 802.3af

- Controller software is a bit cumbersome to use

- Not all advertised features are currently available such as band steering and airtime fairness

- Guest portal and rate limiting options drastically affect performance

Summary

While the UniFi access points are designed for enterprises, they are a great addition to the EdgeRouter Lite. If anyone has a little time to set up an access point and can deal with the not-so-consumer-friendly controller software, I would definitely recommend this line of access points. If you’re OK with the 3×3 MIMO on 2.4 GHz and 2×2 MIMO on 5 GHz vs 3×3 on 5 GHz, then the LR access point is probably the better bang for your buck. For a home network where $20 isn’t going to break the bank, the Lite may not be a great choice unless the smaller size is attractive due to mounting. In my case, I’ll be mounting 1 access point behind my TV and 1 in my office, so no one will see them. If you’re like me and the lack of 802.3af PoE bothers you, then the Pro access point is the way to go. Since I already have a PoE switch (actually 2 of them, and neither is a Ubiquiti switch that provides passive PoE), having to use an unsightly injector (which uses an extra power outlet) doesn’t excite me.

The Ubiquiti forums provide a wealth of information for the tinkerer. Ubiquiti staff are very helpful and provide lots of answers (as do community members). The controller software and AP firmware are being updated all the time, which is very exciting; I don’t need new features, but a fresh UI and more options (such as being able to turn off the LED without using a command line) would be nice.

For better coverage, getting at least 2 access points would go a long way to having full coverage in a house. While 1 will get me coverage bars all over my house, a second one will give me better performance and not just bars of coverage. Once I get the Pro unit, I’ll be able to space out my access points.

Most home users just accept mediocre WiFi coverage and buy into the marketing of many router/access points that say that their access points perform better than others. The problem is that an access point can have higher transmit power (up to the maximum allowed), but if your device can’t connect or can’t get good WiFi performance, that doesn’t help. More access points are going to provide better, more consistent coverage. The UniFi access points do that quite well at a reasonable price.

NOTE: Test units were provided to me at no cost from Ubiquiti Networks. However, that didn’t influence the results of this review and no conditions were placed on what I wrote about the units.